From Pilot to Production: Operationalising Generative AI in Engineering

Over the years, we’ve spoken with many leaders in the engineering industry, and they agree on one idea: scaling sustainable engineering today is not just about building smarter machines, but about building smarter ecosystems. As these leaders worked to meet rising demands for efficiency and decarbonisation, their real breakthroughs came when they unified IoT-powered field data, Sustainability Cloud insights, and CRM Analytics into a single Salesforce-driven backbone.

There was a time when Generative AI was an experimental novelty. That time has passed. Engineering teams across the globe have enthusiastically embraced GenAI pilots, applying them to everything from enhancing product designs to simulating engineering scenarios. Yet despite this rapid surge in experimentation, only a handful of these pilots ever make it to full-scale production. Rather than shrinking, the gap between ideation and implementation is widening. This is what we call the GenAI paradox.

The GenAI Paradox: What Exactly Is It?

Across industries, organisations are investing aggressively in proofs of concept and sandbox GenAI experiments. Engineering teams are eager to test new models and discover innovative applications. Yet despite this intense burst of activity, only a small fraction of these initiatives ever progress beyond the pilot phase.

This is the GenAI paradox: while experimentation is at an all-time high, operationalisation remains remarkably low. Enterprises, particularly in complex, compliance-heavy sectors like engineering, urgently need faster development cycles and sustainable competitive differentiation. However, instead of production-ready GenAI systems that deliver measurable business value, most organisations are left with compelling demos that never reach scale.

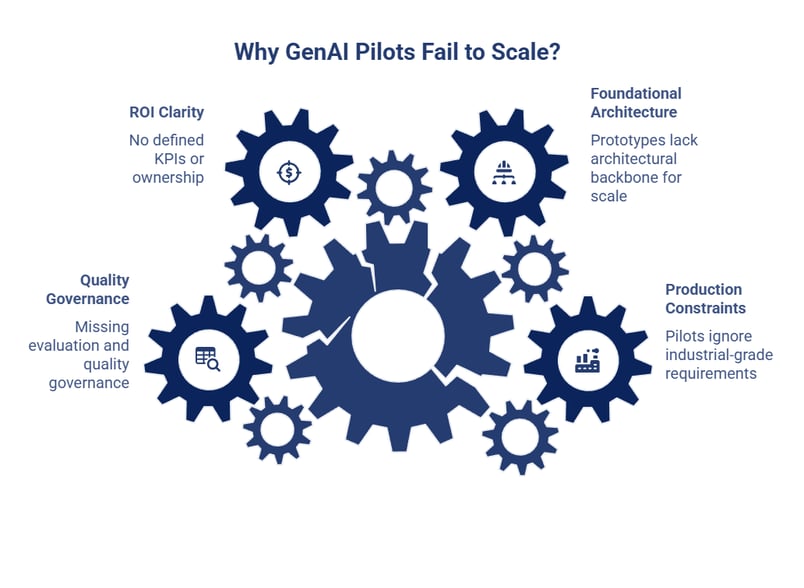

Why Do Most GenAI Pilots Fail to Scale?

The answer is simple: the problem isn’t a lack of use cases but the absence of operational rigour. Most prototypes are built as experimental showcases, disconnected from the realities of industrial systems and regulatory frameworks, so what starts as an exciting concept never matures into a dependable, plant-ready capability. More specifically, these are the most common reasons GenAI pilots fail to scale:

1. Pilots Built Without Production Constraints

GenAI models are often designed in isolated innovation labs. They do not consider industrial-grade requirements such as environmental compliance, cybersecurity standards for OT/IT systems, traceability expectations, or integration with SCADA, ERP, or supplier ecosystems. When it’s time to deploy in a live plant or grid-connected environment, the pilot collapses under constraints it was never engineered to handle.

2. One-Off Prototypes Lacking Foundational Architecture

Prototypes in engineering companies frequently lack the architectural backbone needed for scale. There are no APIs for connecting to energy asset platforms, no lineage or monitoring for model outputs, and no access controls to protect emissions data or proprietary designs. Without these foundations, operations teams cannot industrialise the solution.

3. No Clarity on ROI or Sustainability Ownership

Many GenAI pilots begin as “innovation experiments” with no defined KPIs for efficiency gains, resource optimisation, carbon reduction, or safety improvements. Without ownership from engineering and operations teams, and without measurable impact, pilots stall at the proof-of-concept stage.

4. Missing Evaluation and Quality Governance

GenAI performance cannot be assessed like traditional software. Many organisations fail to define guardrails for hallucination risks, model drift tied to changing asset conditions, or the accuracy thresholds required for regulatory reporting. Without continuous evaluation loops, models become unreliable, which erodes trust across engineering and field operations.

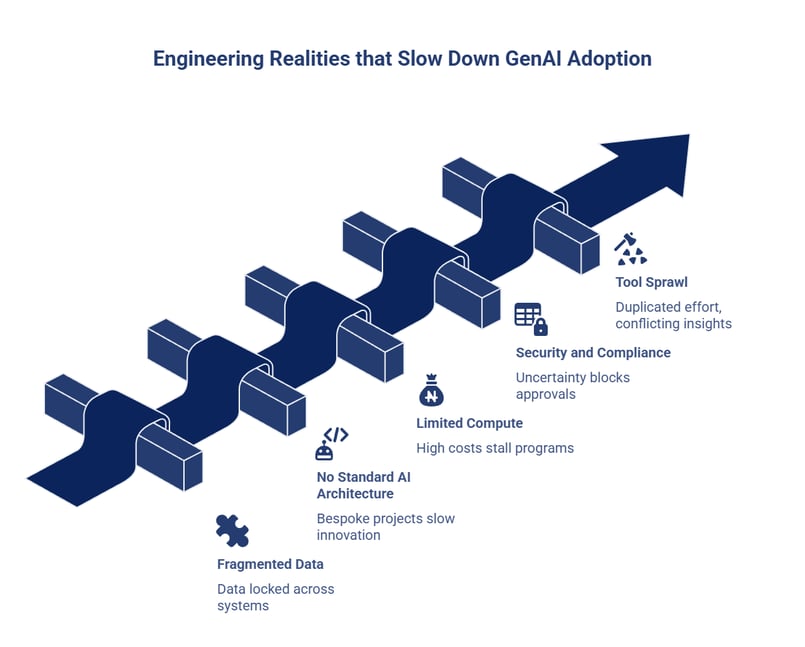

Engineering Realities That Slow Down GenAI Adoption

GenAI thrives on agility, while engineering ecosystems are built for compliance and predictability. Here are some harsh engineering realities that slow down your GenAI adoption:

1. Fragmented Operational Data and Legacy OT Systems

Engineering firms run complex energy assets and field operations powered by SCADA, PLCs, and other OT systems that were never designed with LLMs in mind. Data is siloed across plants and suppliers, making model training and contextual reasoning nearly impossible without costly integration projects.

2. No Standardised AI Architecture or MLOps for Industrial AI

Most sustainability-led engineering organisations lack a unified architecture for deploying AI models across plants and supply chains, so every GenAI use case becomes a bespoke project. New pipelines, new governance rules, and new deployment patterns all slow down innovation and consume scarce engineering bandwidth.

3. Limited Compute Strategy and High Cost Sensitivity

Unlike digital-native firms, engineering companies operate under tight capex controls and energy-efficiency mandates. GPU sourcing and inference costs clash with sustainability targets and financial scrutiny. This causes GenAI programs to stall at the budgeting and procurement stages.

4. Security and Compliance Barriers Rooted in Safety and IP

Green engineering involves proprietary designs, emissions data, equipment telemetry, and safety protocols governed by environmental and industrial regulations. GenAI’s probabilistic nature introduces a degree of uncertainty that audit, EHS, and compliance teams cannot tolerate. As a result, approvals move slowly or get blocked entirely.

5. Tool Sprawl Across Projects and Functions

Different business units experiment with standalone copilots, SaaS AI tools, and vendor-provided assistants. With no shared governance or model oversight, these tools create duplicated effort, conflicting insights, and unmanaged sustainability claims.

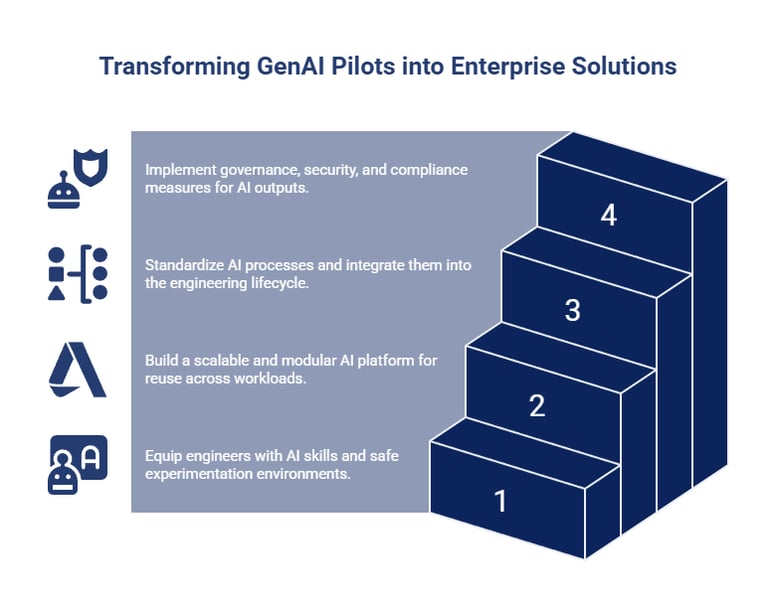

How to Turn GenAI Experiments Into Enterprise-Grade Engineering Solutions

Transforming Generative AI pilots into production-ready engineering solutions requires more than clever prototypes. It demands cultural readiness, architectural rigour, governed workflows, and a foundation of responsible AI practices. Here are four key steps to turn your GenAI experiments into enterprise-grade engineering solutions:

Step 1: Support Engineering Teams Through Change Management

The biggest barrier to GenAI adoption in engineering is not the technology but the capability to use it. Engineering teams, while highly skilled in systems thinking and technical design, often lack expertise in prompt engineering and an understanding of how GenAI reasoning differs from deterministic software. Many organisations nonetheless introduce AI copilots and model-assist tools without structured enablement, which often leads to low adoption and scepticism.

What you must do:

- Institutionalise AI skills: Create GenAI champions and run focused capability programs so AI proficiency becomes a core engineering skill.

- Enable safe experimentation: Provide sandbox environments for hands-on testing without risking production systems.

- Make engineers AI co-creators: Move teams from passive tool users to designers and deployers of GenAI solutions at scale.

Step 2: Design a Production-Ready Generative AI Architecture

A scalable GenAI foundation cannot be an isolated proof-of-concept. It must evolve beyond a single-app implementation toward a cohesive enterprise GenAI platform. Production architectures require a clear separation of core layers like model orchestration, retrieval mechanisms, guardrails, observability, and integration pipelines. This ensures that AI services can be reused across multiple engineering workloads instead of being rebuilt for every project.

What you must do:

- Build a platform, not a PoC: Move from isolated GenAI experiments to an enterprise-wide architecture with reusable services and shared components.

- Modularise core layers: Separate model orchestration, retrieval, guardrails, observability, and integration pipelines to scale across engineering workloads without rework.

- Design for evolution: Ensure the architecture supports model swaps and consistent performance across CAD, CAE, documentation, and code systems.
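To make the idea of separated layers more concrete, here is a minimal Python sketch, assuming a design where each layer (model, retrieval, guardrails, observability) sits behind its own small interface and an orchestrator wires them together. Every class name here (`ModelProvider`, `KeywordRetriever`, `BlockedTermGuardrail`, `StubModel`, `Orchestrator`) is hypothetical, the retriever is a toy keyword matcher standing in for a real vector store, and `StubModel` merely echoes the question where a production system would call an actual LLM.

```python
from dataclasses import dataclass, field
from typing import Optional, Protocol


class ModelProvider(Protocol):
    """Model layer: any LLM backend that turns a prompt into text."""
    def generate(self, prompt: str) -> str: ...


class Retriever(Protocol):
    """Retrieval layer: returns context passages relevant to a query."""
    def retrieve(self, query: str) -> list[str]: ...


class Guardrail(Protocol):
    """Guardrail layer: returns a reason if the output must be blocked, else None."""
    def check(self, output: str) -> Optional[str]: ...


class KeywordRetriever:
    """Toy keyword-overlap retriever standing in for a real vector store."""
    def __init__(self, docs: list[str], min_overlap: int = 2) -> None:
        self.docs = docs
        self.min_overlap = min_overlap

    def retrieve(self, query: str) -> list[str]:
        terms = set(query.lower().split())
        return [d for d in self.docs if len(terms & set(d.lower().split())) >= self.min_overlap]


class BlockedTermGuardrail:
    """Blocks any output that mentions a forbidden term."""
    def __init__(self, blocked: set[str]) -> None:
        self.blocked = blocked

    def check(self, output: str) -> Optional[str]:
        hits = sorted(t for t in self.blocked if t in output.lower())
        return f"blocked terms: {hits}" if hits else None


class StubModel:
    """Hypothetical placeholder that echoes the question; a real provider would call an LLM."""
    def __init__(self, name: str) -> None:
        self.name = name

    def generate(self, prompt: str) -> str:
        return f"[{self.name}] {prompt.splitlines()[-1]}"


@dataclass
class Orchestrator:
    """Orchestration layer: wires the other layers together and records a trace."""
    model: ModelProvider
    retriever: Retriever
    guardrails: list[Guardrail]
    trace: list[dict] = field(default_factory=list)  # observability layer, in-memory here

    def answer(self, question: str) -> str:
        context = self.retriever.retrieve(question)
        prompt = "\n".join(["Context:", *context, f"Question: {question}"])
        output = self.model.generate(prompt)
        for guard in self.guardrails:
            reason = guard.check(output)
            if reason:
                self.trace.append({"question": question, "status": "blocked", "reason": reason})
                return "Output withheld pending engineering review."
        self.trace.append({"question": question, "status": "ok", "context_docs": len(context)})
        return output


if __name__ == "__main__":
    docs = ["Pump P-101 maintenance interval is 6 months", "Valve V-7 torque spec is 40 Nm"]
    bot = Orchestrator(StubModel("model-a"), KeywordRetriever(docs),
                       [BlockedTermGuardrail({"confidential"})])
    print(bot.answer("What is the pump maintenance interval"))
    bot.model = StubModel("model-b")  # swap only the model layer; nothing else changes
    print(bot.answer("What is the pump maintenance interval"))
```

The point of the design is the last two lines: because the orchestrator depends only on the interfaces, swapping the model (or the retriever, or adding a guardrail) does not require rebuilding the workflow for each project.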

Step 3: Build Governed, Repeatable AI Workflows

Many engineering organisations still treat GenAI as an ad-hoc assistant rather than a governed capability. Without standardised prompts and automated evaluation, outputs become unpredictable and unusable at scale, and GenAI cannot be audited or reused across engineering workloads.

What you must do:

- Standardise AI workflows: Replace one-off prompting with reusable, approved templates and governed patterns. This will ensure consistency and traceability across engineering tasks.

- Integrate into the engineering lifecycle: Embed GenAI into documentation, design reviews, testing, and code processes with human-in-the-loop controls where judgment matters.

- Automate evaluation and feedback: Implement pipelines that continuously test AI outputs for accuracy, safety, compliance, and bias. This creates versioned workflows that improve over time and scale reliably.
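As an illustration of governed templates combined with an automated evaluation gate, the Python sketch below assumes that each prompt is a versioned, explicitly approved template and that a release is blocked unless a suite of test cases passes above a threshold. The names `PromptTemplate`, `EvalCase` and `evaluate`, the 0.95 default threshold, and the simple substring checks are all hypothetical simplifications; real evaluation pipelines would typically use richer accuracy, safety and bias metrics.

```python
from dataclasses import dataclass
from string import Template
from typing import Callable


@dataclass(frozen=True)
class PromptTemplate:
    """A versioned, reviewable prompt; only approved versions may be rendered."""
    template_id: str
    version: int
    text: str
    approved: bool = False  # set to True only after review sign-off

    def render(self, **values: str) -> str:
        if not self.approved:
            raise PermissionError(f"{self.template_id} v{self.version} is not approved")
        # Template.substitute raises KeyError if a placeholder has no value
        return Template(self.text).substitute(**values)


@dataclass(frozen=True)
class EvalCase:
    inputs: dict[str, str]
    must_contain: list[str]      # crude accuracy check: facts the output must state
    must_not_contain: list[str]  # crude safety check: content the output must avoid


def evaluate(template: PromptTemplate, generate: Callable[[str], str],
             cases: list[EvalCase], threshold: float = 0.95) -> dict:
    """Run every case through the model and decide whether this template version may ship."""
    if not cases:
        raise ValueError("at least one evaluation case is required")
    failures = []
    for i, case in enumerate(cases):
        output = generate(template.render(**case.inputs)).lower()
        has_required = all(s.lower() in output for s in case.must_contain)
        has_forbidden = any(s.lower() in output for s in case.must_not_contain)
        if not has_required or has_forbidden:
            failures.append(i)
    pass_rate = 1 - len(failures) / len(cases)
    return {"template": f"{template.template_id}@v{template.version}",
            "pass_rate": pass_rate, "release": pass_rate >= threshold, "failures": failures}


if __name__ == "__main__":
    tmpl = PromptTemplate("safety-summary", 2,
                          "Summarise the safety protocol for $asset:\n$protocol", approved=True)
    echo = lambda prompt: prompt  # hypothetical stand-in for a real model call
    cases = [
        EvalCase({"asset": "Boiler B-2", "protocol": "Isolate fuel supply before inspection."},
                 ["isolate fuel supply"], ["bypass"]),
        EvalCase({"asset": "Press P-4", "protocol": "Bypass the interlock to save time."},
                 ["lockout"], ["bypass"]),
    ]
    print(evaluate(tmpl, echo, cases))
    # {'template': 'safety-summary@v2', 'pass_rate': 0.5, 'release': False, 'failures': [1]}
```

Because the template ID and version travel with every evaluation result, each output can be traced back to the exact approved prompt that produced it, and a failing suite stops that version from being promoted.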

Step 4: Strengthen Responsible AI, Security, and Compliance

Once GenAI touches engineering data and influences physical designs, the risk surface expands dramatically. Without enforceable governance and regulatory alignment, AI outputs may be innovative but unverifiable or non-compliant. This makes them impossible to trust or deploy in production.

What you must do:

- Enforce governance and access controls: Implement audit trails and secure model/vector-store access to protect IP and sensitive engineering data.

- Align with regulations and standards: Embed compliance with industry codes, safety requirements, export restrictions, and engineering standards directly into GenAI workflows.

- Ensure traceability and explainability: Maintain model lineage, decision logs, and explainable outputs so AI-assisted engineering decisions can be inspected and certified.
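To show how access controls, audit trails and model lineage can fit together, here is a minimal Python sketch, assuming a simple role-to-collection policy for vector-store access and an append-only log in which each entry stores the SHA-256 hash of the previous one, so later tampering is detectable. The role names, collection names, `ACCESS_POLICY`, `AuditLog` and `authorise_retrieval` are all hypothetical; a production system would source the policy from an identity service and persist the log in durable, write-once storage.

```python
import hashlib
import json
from datetime import datetime, timezone

# Hypothetical policy: which vector-store collections each role may query.
ACCESS_POLICY = {
    "design_engineer": {"cad_specs", "design_reviews"},
    "ehs_auditor": {"emissions_reports", "safety_protocols", "design_reviews"},
}


def _digest(body: dict) -> str:
    """Deterministic SHA-256 over a JSON-serialised entry."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only log where each entry hashes the previous one, so edits are detectable."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, record: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {**record, "timestamp": datetime.now(timezone.utc).isoformat(),
                "prev_hash": prev_hash}
        body["hash"] = _digest(body)  # hash computed before the "hash" key is added
        self.entries.append(body)
        return body

    def verify(self) -> bool:
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


def authorise_retrieval(user: str, role: str, collection: str, query: str,
                        model_version: str, log: AuditLog) -> bool:
    """Check the policy and record the decision together with the model version (lineage)."""
    allowed = collection in ACCESS_POLICY.get(role, set())
    log.append({"user": user, "role": role, "collection": collection, "query": query,
                "model_version": model_version, "decision": "allow" if allowed else "deny"})
    return allowed


if __name__ == "__main__":
    log = AuditLog()
    authorise_retrieval("a.khan", "design_engineer", "cad_specs", "flange tolerances", "model-a:v3", log)
    authorise_retrieval("a.khan", "design_engineer", "emissions_reports", "Q3 CO2 totals", "model-a:v3", log)
    print([e["decision"] for e in log.entries], log.verify())
```

Every request, allowed or denied, leaves a record naming the user, the data source and the model version involved, which is the kind of evidence auditors need before certifying AI-assisted engineering decisions.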

How Brysa Helps Engineering Teams Operationalise GenAI

Most engineering organisations don’t struggle with ideas. They struggle with execution. Brysa bridges this gap by helping your engineering teams move beyond isolated GenAI experiments and embed AI-enabled capabilities directly into your operational and design workflows. As a Salesforce specialist for engineering-driven enterprises, we combine deep platform expertise with an understanding of industrial processes and complex product lifecycles. Instead of offering yet another AI proof of concept, our consulting services build an enterprise backbone that makes GenAI secure and scalable across your entire engineering ecosystem.

Here is our approach:

We align GenAI initiatives with your existing Salesforce investments. Many engineering companies already run critical workflows on Salesforce. Brysa enhances these touchpoints with GenAI-enabled features such as engineering document automation, intelligent design handovers, safety protocol summarisation, and insights from sensor or field data.

By keeping AI tightly integrated into the systems engineers already use, we eliminate tool fatigue and fragmented pilots that derail most GenAI programs. The result is not just better prototypes, but AI-powered engineering workflows ready for industrial deployment.

Ready to turn your AI aspirations into production-grade outcomes? Get in touch with us now.